
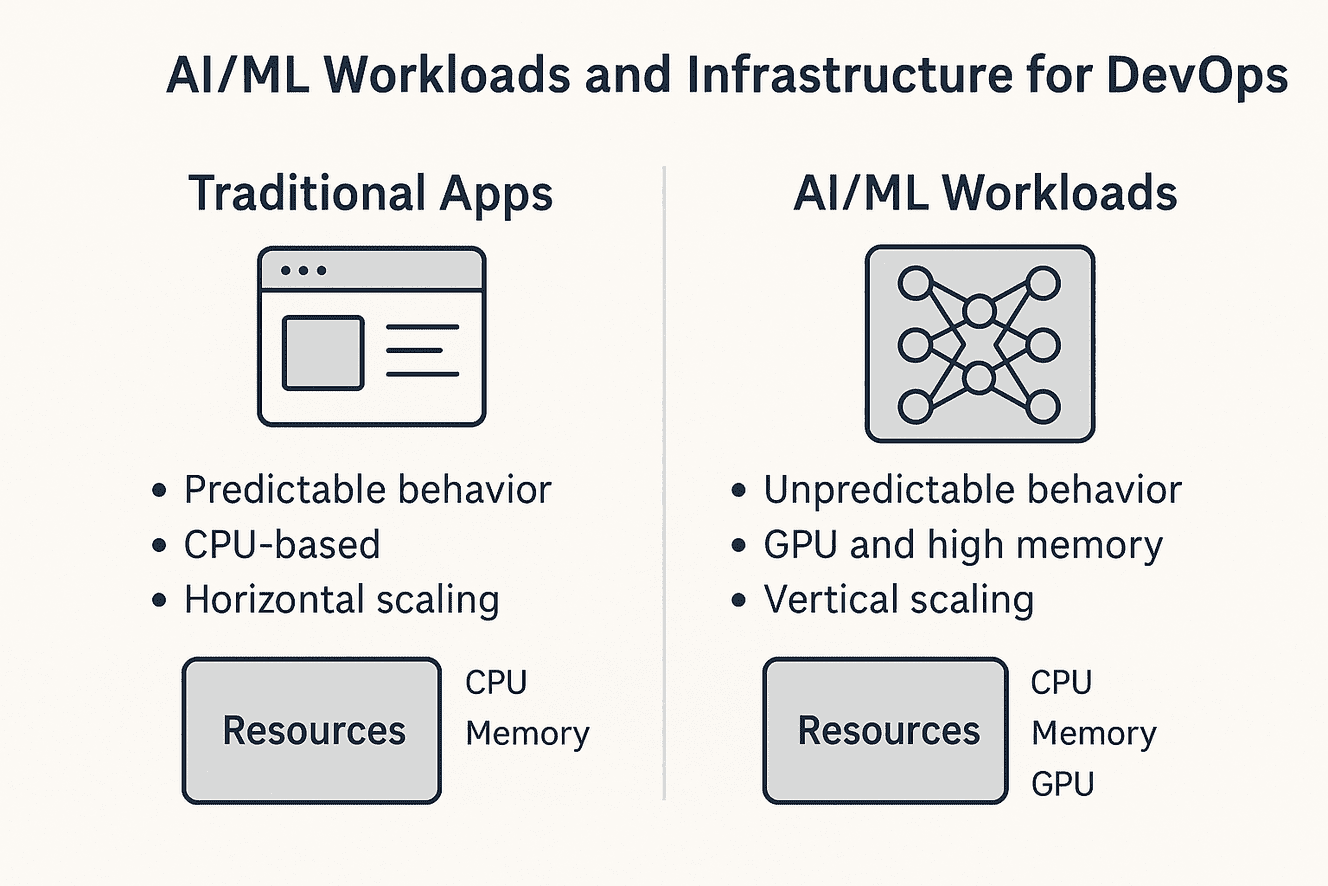

"Explore why AI/ML workloads require a different approach in DevOps, focusing on resource allocation, scaling, and infrastructure management challenges."

As a DevOps engineer, you're used to managing containers, scaling infrastructure, and deploying microservices. But what happens when AI models enter the picture? Suddenly, everything you know about infrastructure and scaling feels a bit off. Why does a text-processing AI need 32GB of RAM? Why does deployment take minutes instead of seconds?

In this post, let’s explore the key differences between traditional apps and AI/ML workloads, and how they affect DevOps practices.

AI models, especially Large Language Models (LLMs), can be massive; some need 32GB of RAM just to run. Traditional apps typically run on CPUs and scale horizontally by adding more small replicas. Large AI models, by contrast, often need GPUs and huge amounts of memory just to load and serve requests.
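A quick back-of-the-envelope calculation shows where numbers like 32GB come from: the memory needed just to hold a model's weights is roughly the parameter count multiplied by the bytes per parameter. The sketch below assumes weights dominate and ignores activations, KV cache, and runtime overhead, so real usage will be higher. The 7-billion-parameter model is a hypothetical example, not a specific product.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate. Activations, KV cache and framework
# overhead are NOT included, so real memory usage will be higher.

BYTES_PER_PARAM = {
    "fp32": 4,  # full precision
    "fp16": 2,  # half precision
    "bf16": 2,  # bfloat16
    "int8": 1,  # 8-bit quantized
}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model at different precisions
    for dtype in ("fp32", "fp16", "int8"):
        print(f"7B params @ {dtype}: {weight_memory_gb(7e9, dtype):.1f} GB")
```

For the hypothetical 7B model this gives about 28 GB in fp32, 14 GB in fp16, and 7 GB in int8, which is why precision and quantization choices feed directly into node sizing and GPU selection.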

Model initialization is also slow. Traditional apps start in seconds, but AI models can take minutes to load their weights into memory. This is why pre-warming your models and keeping them loaded and ready to serve is essential.
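One common pattern for pre-warming is to load the model once at process startup, run a throwaway warm-up inference, and only then report the service as ready, for example by wiring `is_ready()` to a Kubernetes readiness probe so no traffic arrives mid-load. The minimal sketch below assumes a hypothetical `_load_model` that just sleeps and returns a toy function; in practice you would replace it with your framework's real loading call.

```python
import threading
import time


class ModelServer:
    """Sketch: load once, warm up, then flip a readiness flag."""

    def __init__(self, load_seconds: float = 0.1):
        self._load_seconds = load_seconds
        self._model = None
        self._ready = threading.Event()  # set only after warm-up completes

    def _load_model(self):
        # Hypothetical stand-in for a slow model load (weights from disk, etc.).
        time.sleep(self._load_seconds)
        return lambda text: text.upper()  # toy "model"

    def start(self) -> None:
        """Load weights and run one warm-up call; call once at startup."""
        self._model = self._load_model()
        # The first inference often pays one-time costs (lazy init, caches),
        # so pay them here instead of on a real user request.
        self._model("warm-up")
        self._ready.set()

    def is_ready(self) -> bool:
        """Suitable backing for a readiness/health endpoint."""
        return self._ready.is_set()

    def predict(self, text: str) -> str:
        if not self._ready.is_set():
            raise RuntimeError("model not ready")
        return self._model(text)


if __name__ == "__main__":
    server = ModelServer()
    loader = threading.Thread(target=server.start)
    loader.start()                       # load in the background
    print("ready?", server.is_ready())   # most likely False while loading
    loader.join()
    print("ready?", server.is_ready())
    print(server.predict("hello"))
```

Loading in a background thread keeps a health endpoint responsive during the slow load, while the readiness flag stops the orchestrator from routing requests to a replica that is not warm yet.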

Netflix doesn’t just recommend movies based on simple tags; it uses AI to analyze viewing history, time patterns, and other data points. Doing this at scale requires distributed systems and infrastructure that can process huge amounts of data in real time.

AI/ML workloads are complex, but they don’t have to be intimidating. With the right infrastructure and scaling strategies, you can handle AI models as smoothly as any traditional app. Embrace the new mindset: AI is just a different kind of challenge, and one that DevOps can conquer!

theopskart

Roshan Kumar Singh is the founder of TheOpskart and a passionate DevOps + AI Evangelist. With over a decade of experience in building scalable cloud infrastructures and automating DevOps pipelines, Roshan is dedicated to helping professionals bridge the gap between traditional DevOps and the rapidly growing world of AI/ML. He shares insights, practical tips, and hands-on solutions to empower the next generation of DevOps engineers and tech enthusiasts.